Updated, 26 Dec 2018: This issue does not stand still! For most recent updates, see this forum thread.

A common, and reasonable question that we are frequently asked is "why are you asking people to annotate blood vessels instead of just using machines?"

This is a fantastic question and it is a question that underlies the inception of most crowdsourcing and citizen science projects. In conceiving these projects, the reason we look to humans in the first place is because we have exhausted all other possibilities. Indeed, we believe it would be unethical to ask people to spend their time annotating blood vessels if machines could do the job well enough.

Fragment of the data generated at the Schaffer-Nishimura lab. Each vessel segment (outlines) needs to be analysed to identify stalls - cases of blocked blood vessels.

Fragment of the data generated at the Schaffer-Nishimura lab. Each vessel segment (outlines) needs to be analysed to identify stalls - cases of blocked blood vessels.

When Prof. Chris Schaffer at Cornell University first described the data analysis bottleneck they were experiencing in their lab, the first question I asked was "have you tried using a machine to solve this problem?"

For me, this was a key question for several reasons. I spent over four years helping manage a large federal research program in computer vision. Even though I was already versed in various AI methods, this experience gave me broad and deep exposure to the most current methods available today and the latest research into advancing these methods.

Twelve world-class research teams pushed the envelope on machine interpretation of visual data. In this kind of work, it is typical to explore various representations for capturing key qualitative features while reducing the dimensionality of the data (e.g., histogram of gradients, eigenvectors, etc.) as well as various machine-learning approaches that are optimized to visual classification (e.g., neural networks, support vector machines, etc.).

I felt almost certain that some machine-based methods must exist that could perform well on this task. Thus, before investing my own efforts and, ultimately, committing the prospective online labor of thousands of other people who care about vanquishing Alzheimer's disease, I wanted to make sure there was absolutely no other way to crack this nut.

What I learned next, however, was critical. Prof. Schaffer explained that his lab previously experimented with a variety of machine-based methods, ones that other biomedical researchers had applied successfully in similar kinds of visual classification tasks. The best result was only 85% accurate. In order to have valid Alzheimer's research results they need accuracy around 99% (with high specificity and a low tolerance for false negatives).

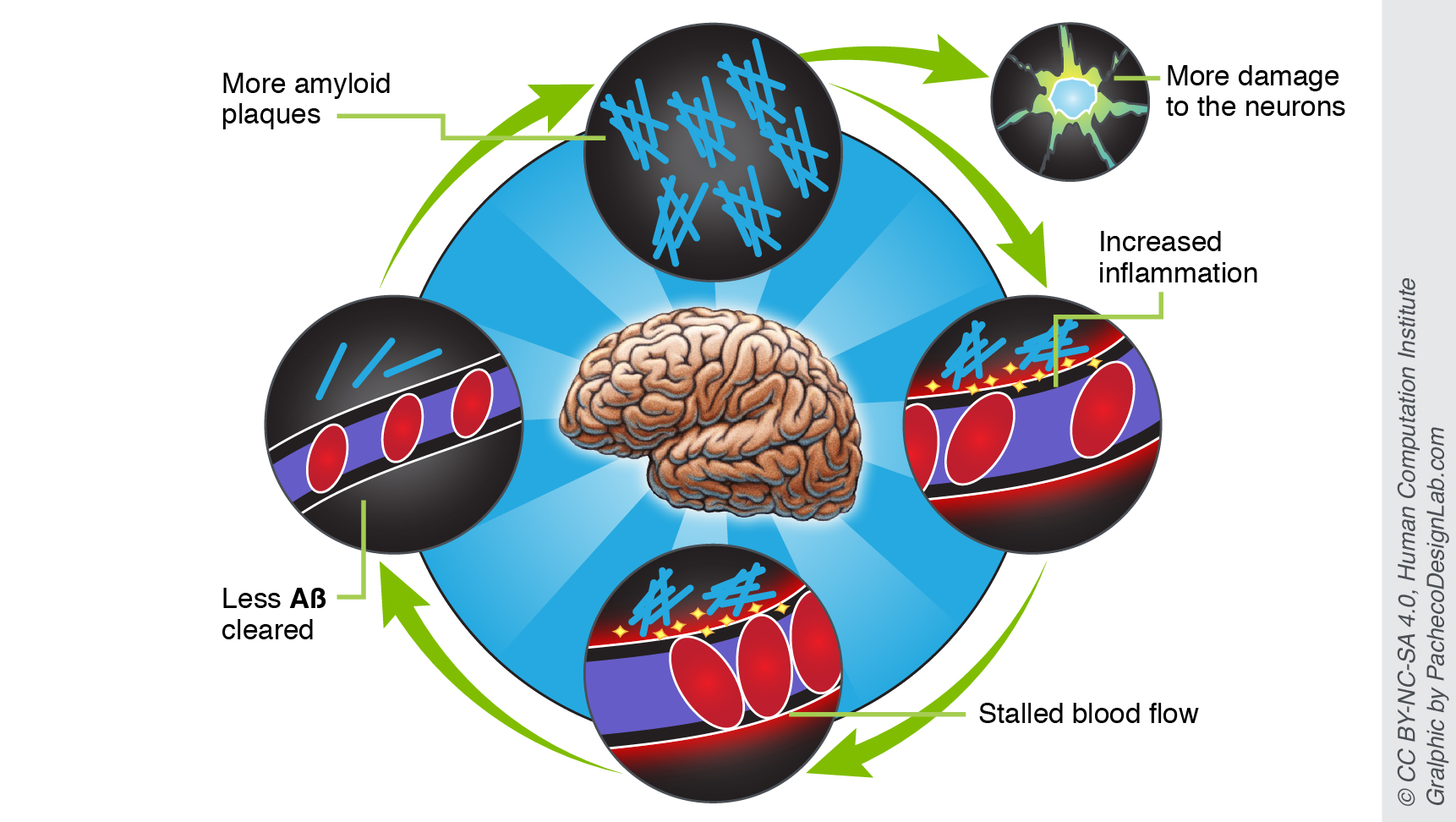

Each stall can have detrimental effects on the brain, which is why it is important the chosen method of analysis is accurate enough, and avoids false negatives.

Each stall can have detrimental effects on the brain, which is why it is important the chosen method of analysis is accurate enough, and avoids false negatives.

Despite these unfavorable results, we continue to experiment with the latest machine-based methods as they become available. Indeed, our Princeton-based collaborators, who have successfully applied machine-learning algorithms to pre-processing data for the EyeWire project, as well as other EyesOnALZ project members at HCI and Cornell, continue to pursue automated vessel classification. In fact, one of our team members, who is passionate about machine learning, has suggested conducting an open challenge for the community of machine learning researchers to test their best methods on our data. Crowdsourcing a solution in this way may be another promising avenue for getting traction on this problem.

Citizen Science is an example of a "Human Computation" system. The basic idea in human computation is that humans have certain strengths that are different, yet complementary to what machines can do. So we seek to find ways to build systems that combine humans, machines, and are technosocial infrastructure (e.g., Internet) to solve problems that human or machines alone can't solve. In this vein, we are also contemplating methods for having machines assign confidence levels to their annotations. In other words, let the machines tell us which ones they can and can't figure out. If this were successful, then we could let the machines give us answers for the 85% they know how to do, and then we humans would have a smaller, but perhaps more challenging set to work with. This could be an effective division of labor and a productive intermediate solution until we have machines that can accurately annotate all vessels.

But why is it that machines fail at this task where humans are able to succeed? Most machine-based methods rely on purely visual information to make their decisions. Even learning systems are limited in the complexity of the patterns they are able to abstract and learn. For example, accurately annotating some vessels requires contextual information that can't be expressed in terms of pixels, such as evaluating the flow of connected vessels and reasoning over their flow dynamics. Humans bring world knowledge, contextual reasoning (e.g. case-based reasoning), and the ability to dynamical infer new representations and build useful abstractions that involve not just visual elements, but conceptual elements. Though we are experimenting with these capabilities in machines today, we are no where near achieving human-like capabilities in these regards.

Another question that often arises is: "but when I play Stall Catchers, I get lots of vessels wrong – I am no where near 99% accurate, in fact my track record is worse than your 85% accurate machine." Today, we are able to achieve near-perfect results using consensus algorithms that combine answers from many different people into one, very accurate crowd-answer. This is sometimes referred to as "crowd wisdom". There is a nice reference to an early example of this at a carnival, when someone realized that when guessing the weight of a person, it was the median guess that was often spot on. Our methods today are more sophisticated and reliable than simply using measures of central tendency. Nonetheless, in our current crowd validation study, our preliminary results already suggest near perfect accuracy using a simple mean. However, using methods that model the cognitive states of our participants, we believe we can achieve higher accuracy while combining fewer annotations for the same vessel. In practice, this means we could substantially increase the value of each annotation that a participant provides and reduce the number of annotations needed to get an accurate crowd answer.

By using human computation methods, we can make sure that we get the best "crowd-answer" that is as accurate as that of trained experts.

By using human computation methods, we can make sure that we get the best "crowd-answer" that is as accurate as that of trained experts.

We hope someday that machines will prevail at catching stalls so we no longer need to rely on human eyes to solve this problem. In the meantime we are fortunate to have powerful methods for employing crowd-based analysis, and even luckier to have a growing community of dedicated participants who care deeply about accelerating a treatment for Alzheimer's disease and are willing to generously donate their time to doing exactly that.