Early peek at the #Dreamathon research results 📈 📊

We just took a first peek at the image labels you provided during the Dreamathon and wanted to circle back with some preliminary results!

But first, some participation stats...

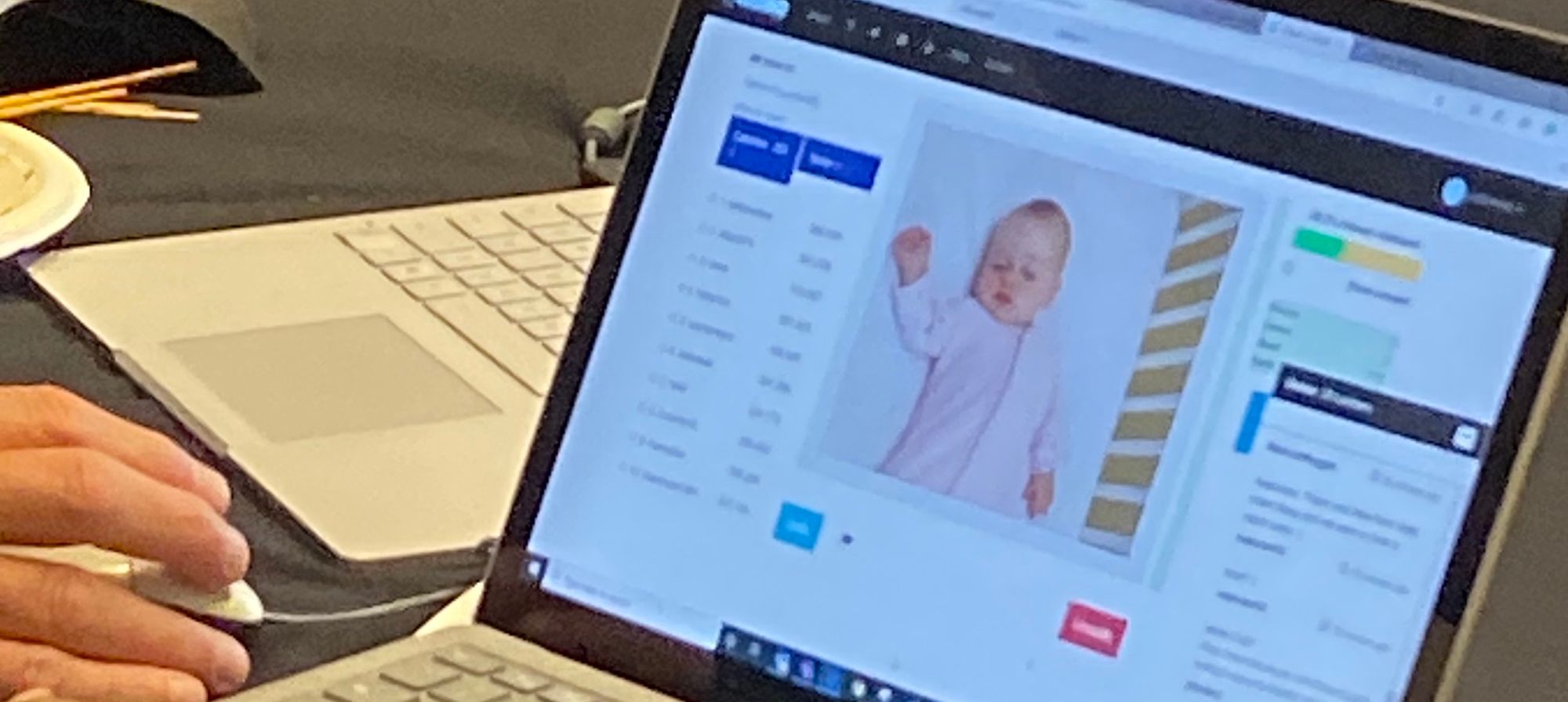

259 volunteers from around the U.S. and beyond participated in the inaugural Dreamathon by playing Dream Catchers, a new citizen science platform that advances SIDS prevention research. (Dream Catchers was spun off from Stall Catchers, which has been contributing to Alzheimer's disease research for over three years.)

Dream Catchers was launched on October 24, 2019. During the first 24 hours of going live, online volunteers made history by providing 32,442 safe/unsafe image labels in the first crowdsourced analysis of SIDS research data. Of these, 5,941 labels were provided in the final hour by the on-site participants at the Dreamathon’s HQ on the Microsoft campus in Redmond, WA. On average, each participant labeled 115 images, and the most labels provided by any one participant was 2,382!

Did folks enjoy playing Dream Catchers? Here's what one catcher had to say on the live chat...

super cool. super fun

and someone else just enjoyed the scenery...

I love looking at babies!

We agree. There is something therapeutic about looking at sleeping babies!

Before we dig into our initial findings, let me start with the usual reminder that all research is uncertain! 😅 At this point we are just taking an initial look at how much your labels agree with the experts on the training images (the ones where you got a “correct” or “incorrect” answer).

So while you read the below, please keep in mind that these results are not final, they are based only on the training images and can actually change substantially after we look at the entire dataset. On the other hand, this process mirrors exactly what scientists often do in the laboratory when they take an early snapshot to see if the results are moving in the expected direction.

What was the research question?

The research question for the Dreamathon dataset was whether or not untrained public volunteers can identify risks in sleep environment images as well as experts by playing Dream Catchers.

So how do the results look so far?

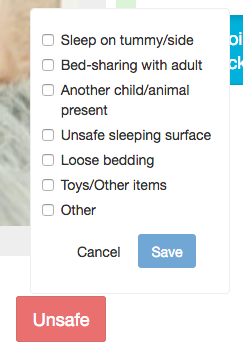

Not too shabby. In Dream Catchers, when an image is labeled as “unsafe”, we give catchers a chance to specify the ways in which it is unsafe.

But in our initial analysis we are only looking at the basic distinction of safe vs unsafe. Here’s what we found: Individuals tend to agree with the experts about 92% of the time. That’s not good enough for the research - we need at least 99% agreement. So we use “wisdom of crowd” methods, in which we combine answers about the same image from many people.

For our quick look, we used a very basic method of doing this where we just took the average crowd answer for each image. Using this rudimentary approach, we discovered that the crowd answers agreed with the experts 99% of the time! Indeed, there were only two images where the crowd disagreed with the experts. So we looked at those images to see what might be going on. In the first image, the crowd said “unsafe” and the experts had said “safe”. Here’s the image:

This is clearly unsafe because the infant is sleeping on her side instead of on her back. So it looks like either the experts made mistake or there was some kind of transcription error. Score 1 for the crowd and 0 for the experts!

In the second case where there was disagreement between the crowd and experts, the situation was a bit different. Here’s the image:

In this case, it’s a bit unclear what is going on. It looks like either the infant could be lying on his side (unsafe), or he could by lying on his back (safe) and have his head turned sideways. The experts said “unsafe”, but the crowd average was 48% unsafe. In other words, 48% of the crowd agreed with the experts and 52% did not. And because, for our quick and dirty method we used a cutoff of 50%, in this case we decided the crowd disagreed.

In or more in-depth analysis, however, we will apply a more sophisticated method of determining the crowd answer, which gives more weight to participants who tend to demonstrate better sensitivity to risk factors. With this new method, and accounting for expert mistakes, as we saw with the first image, we might see 100% agreement between the crowd answers and expert answers.

In any case, this initial view of the Dreamathon data looks quite promising!

What’s next?

Next, we will look at the full validation set, which includes not just the training images, but also 584 additional research images that were labeled during the Dreamathon. The first thing we will do is the safe/unsafe analysis as we did above, but using our more sophisticated wisdom of crowd methods. Then we will look at each sleep environment risk factor separately, so we can see if people have more difficulty noticing some risk factors more than others.

Finally, we will calibrate Dream Catchers so that it operates with the greatest possible efficiency and does not waste anyone’s precious time labeling the images. To do this, we will determine the minimum number of labels we need to collect per image in order to reliably agree with the experts. Once that is done, you and other catchers can start cranking through datasets full of new infant sleep environment images.

That's it so far! Thanks again to everyone who contributed to the SIDS image analysis thus far 💜 And please keep catching! :)

In our next post with the final validation results, we’ll explain in greater detail how your future image labeling on Dream Catchers will be used to help prevent SIDS.